I can’t believe this got released and this is still happening.

This is the revolution in search of RAG (retrieval augmented generation), where the top N results of a search get fed into an LLM and reprioritized.

The LLM doesn’t understand it want to understand the current, it loads the content info it’s content window and then queries the context window.

It’s sensitive to initial prompts, base model biases, and whatever is in the article.

I’m surprised we don’t see more prompt injection attacks on this feature.

A link to the mentioned BMJ publication.

https://www.bmj.com/content/363/bmj.k5094That’s why you should always have your backpack when you board a plane (along with your towel, of course).

Thanks! The OP is a mess (at least when using Boost).

At first I was at least impressed that it came up with such a hilarious idea, but then it of course turns out (just as with the pizza glue) it just stole it from somewhere else.

“AI” as we know them are fundamentally incapable of coming up with something new, everything it spits out is a combination of someone else’s work.

I thought this was fake, but I searched Google for “parachute effectiveness” and that satirical study is at the top, and literally every single link below it is a reference to that satirical study. I have scrolled for a good minute and found no genuine answer…

Why waste money on parachutes when everyone has a backpack at home?!

&udm=14 🙏

deleted by creator

Want to find stuff on the internet? Don’t! Follow for more tips like these!

Google search results have been inferior for a lot of things for a long time now

Inferior to what? Past Google? That’s not available anymore. Hate on Google what you want, the alternatives are worse.

I use duck duck go as default, and more than half of the time I have to go to Google to find what I’m looking for.

Inferior to other search engines. I’ve had more luck finding things I want using ddg when I haven’t been able to on Google. Maybe I’ve been lucky.

I just searched “do maple trees have a tap root”, ai overview says yes. Literally all other reputable sources say no 🙄

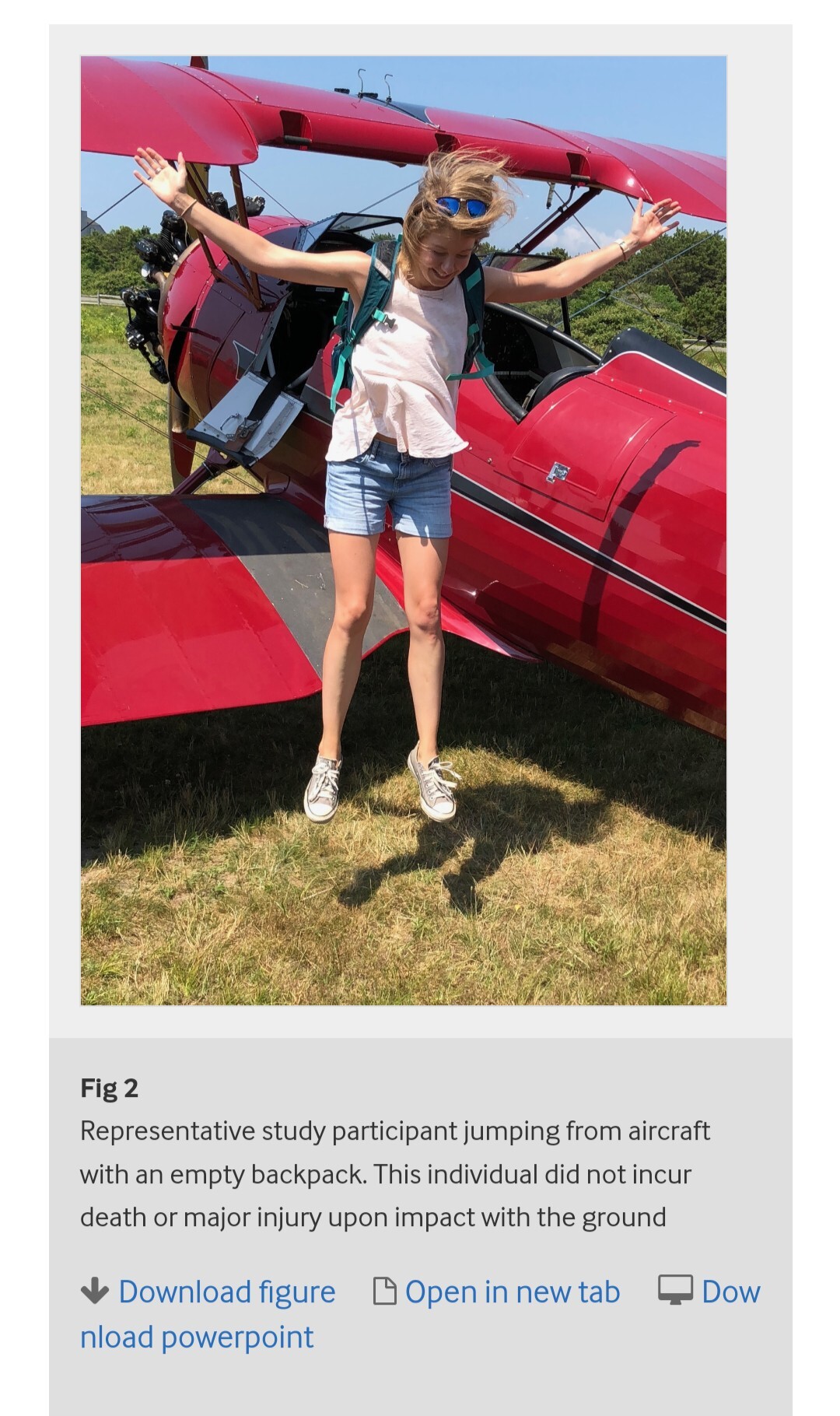

Representative study participant jumping from aircraft with an empty backpack. This individual did not incur death or major injury upon impact with the ground

Hah! I just told someone the other day that LLMs trick people into seeing intelligence basically by cold reading! At last, sweet validation!

At least in the actual Gemini chat when asked about parachute study effectiveness correctly notes this study as satire.

Weird that it actually made note of that but didn’t put it in the summary

It’s because it has no idea what is important or isn’t important. It’s all just information to it and it all carries the same weight, whether it’s “parachutes aren’t any more effective than empty backpacks” or “this study is a satire making fun of other studies that extrapolate information carefully”. Even though that second bit essentially negates the first bit.

Bet the authors weren’t expecting their joke study to hit a second time like this, demonstrating that AI is just as bad at extrapolating since it extrapolated true information from false.

It’s reckless to use these AIs in searches. If someone jokes about pretending to be a doctor and suggesting a stick of butter being the best treatment for a heart attack and that joke makes it into the training set, how would an AI have any idea that it doesn’t have the same value as some doctors talking about patterns they’ve noticed in heart attack patients?

LLMs indeed have a way of detecting satire. The same way humans do.

It’s just that now they’re like the equivalent of five year olds in terms of their ability to detect sarcasm.