Pretty incisive article, and I agree.

In retrospect, I think the marketing/sales/finance corporate leadership idiocy that’s intensified over the last couple decades is the single biggest contributor to the deep sense of frustration and ennui I’ve developed working as a software engineer. It just seems like pretty much fucking nobody in the engineering management sphere these days actually values robust, carefully and thoughtfully designed stuff anymore. Or more accurately, if they do, the higher-ups will fire them for not churning out half-finished bullshit.

That’s why I like my Steam Deck so much: the design is so thoughtful and adapted to its own needs, and unfortunately that’s a rare sight lately (not just in technology).

It probably would’ve turned out differently if Valve were beholden to shareholders and the never-ending hunger for a higher stock price. The push to drive “shareholder value” is one of the most destructive forces, if not the most destructive force, we’re dealing with these days.

Yeah… I’ve been thinking about popping for one for a while now. I should probably just go for it.

Out of curiosity, is the etched AR glass on the top end model actually worth it, or is that more of a gimmick?

I bought the LCD without it and later switched to the OLED with it. Honestly, I barely notice it. Go for the cheapest OLED option. That is a change you will notice, unlike the etching.

Good tip. Thanks, friend!

Just to add on to what others have already said, you can get an anti-reflective screen protector to accomplish the same task. If you think you’ll be playing in environments with a lot of light, then I’d spring for it.

You can get a screen protector that has the same effect.

I’ve admired AMD since the first Athlon, but never made the jump for various reasons, mostly availability. I just bought my first laptop (or any computer) with an AMD chip in it last year, a Ryzen 7 with Radeon 680M graphics. There is no discrete graphics card, and the onboard GPU has performance comparable to a discrete Nvidia GTX 1050. In a 13" laptop. The AMD chip far surpassed Intel’s onboard GPU performance, and Intel laptops were ~30% more expensive from any company. Fuck right off.

Why doesn’t this matter to Intel? Part of why they always held mind space and a near monopoly is their deals with OEM computer makers like HP, Dell, etc. It was almost impossible to find an AMD prebuilt desktop, and laptops were out of the question.

I believe my first AMD was a desktop Athlon around 2000. I needed a fast machine to crunch my undergraduate thesis, and that was the most cost-effective option.

In recent years I couldn’t buy AMD for a powerful machine: I went with an XPS and there were no AMD options. Linux is a requirement for me, so that narrowed down my choices a lot. As you’d expect, the battery life is horrible, compounded by being forced to pay for an NVIDIA card I didn’t choose, which also has poor drivers and power management.

x86 and its successor, the AMD64 instruction set, are a Pandora’s box and a polished turd, hiding things such as micro-instructions, a full-blown small OS running in parallel to and independently of the BIOS, and other nefarious over-engineering practices that are at the root of Spectre and Meltdown.

What I mean is I prefer AMD over Intel, but I prefer RISC-V over both.

deleted by creator

Cheap Intel stock going, then.

I’d be very surprised if they don’t find a way to bounce back, they’ve done it before

Maybe. In the past they have always been able to rely on their dominance in the PC market. With consumers shifting away from it, I don’t think it’s so straightforward, and in other emerging markets like AI they are way behind.

At least they are finally putting actual money into R&D. This article was a really good read. It will be interesting to see whether and how Intel’s investments pay off.

Yup, they need to fund engineering. That’s what AMD did, and it turns out that’s a good strategy. Companies need to provide value to customers, and then marketing’s job is easy.

I am also betting they will bounce back; hopefully this is indeed a good opportunity to buy the stock for cheap.

Intel GPUs are still ahead in some ways. They need to work on getting Intel GPUs into datacenters.

I also like that they are working on creating a more open AI hardware platform

In what ways? Transcoding?

HDMI 2.1 support on Linux 😂😭

Nice read. Thanks OP!

Appreciate it 🙏.

Removed by mod

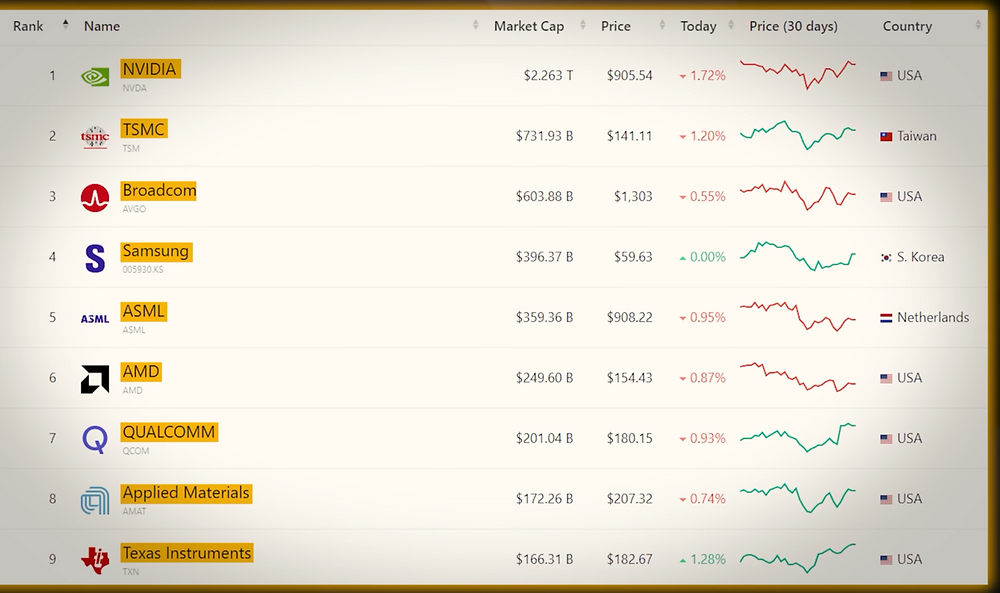

Why? AMD doesn’t make phone chips, yet they’re dominating Intel. Likewise for NVIDIA, who is at the top of the chip maker list.

The problem isn’t what market segments they’re in, the problem is that they’re not dominant in any of them. AMD is better at high-end gaming (X3D chips especially), workstations (Threadripper), and high-performance servers (Epyc), and they’re even better in some cases at power efficiency. Intel is generally better at the low end, but that’s not a big market, and it’s shrinking (e.g. people moving to ARM). AMD has been chipping away at those, one market segment at a time.

Intel entering phones will end up the same way as their entry into GPUs: they’ll have to target the low end of the market to get traction, and they’re going to have a lot of trouble challenging the big players. Also, x86 isn’t a good fit there, so they’ll need to break into the ARM market as well.

No, what they need is to execute well in the spaces they’re already in.

When AMD introduced the first Epyc, they marketed it with the slogan: “Nobody ever got fired for buying Intel. Until now.”

And they lived up to the boast. The Zen architecture was just that good, and they’ve been improving on it ever since. Meanwhile, the technology everyone assumed Intel had up their sleeve turned out to be underwhelming. It’s almost as bad as IA-64 vs. AMD64, and at least Intel managed to recover from that one fairly quickly.

They really need to come up with another Core if they want to stay relevant.

Actually, AMD do make phone chips. That is, they design the Exynos GPUs, which are inside some Samsung devices

Huh, TIL. It looks like they basically put Radeon cores into it.

Removed by mod

Yes, and 5 years ago, they had very little of it. I’m talking about the trajectory, and AMD seems to be getting the lion’s share of new sales.

Removed by mod

I don’t really see ARM as having an inherent advantage. The main reason Apple’s ARM chips are eating x86’s lunch is that Apple has purchased a lot of capacity on next-generation nodes (e.g. 3nm), while x86 chips tend to ship on older nodes (e.g. 5nm). Even so, AMD’s cores aren’t really that far behind Apple’s, so I think the node advantage is the main factor here.

That said, the main advantage ARM has is that it’s relatively easy to license it to make your own chips and not involve one of the bigger CPU manufacturers. Apple has their own, Amazon has theirs, and the various phone manufacturers have their own as well. I don’t think Intel would have a decisive advantage there, since companies tend to go with ARM to save on costs, and I don’t think Intel wants to be in another price war.

That’s why I think Intel should leverage what they’re good at. Make better x86 chips, using external fabs if necessary. Intel should have an inherent advantage in power and performance since they use monolithic designs, but those designs cost more than AMD’s chiplet design. Intel should be the premium brand here, with AMD trailing behind, but their fab limitations are causing them to trail behind and jack up clock speeds (and thus kill their power efficiency) to stay competitive.

In short, I really don’t think ARM is the right move right now, unless it’s selling capacity at their fabs. What they need is a really compelling product, and they haven’t really delivered one recently…

It’s not just node, it’s also the design. If I remember properly, ARM has constant instruction length which helps a lot with caching. Anyway, Apple’s M CPUs are still way better when it comes to perf/power ratio.

Yes, it’s certainly more complicated than that, but the lithography is a huge part since they can cram more transistors into a smaller area, which is critical for power savings.

I highly doubt instruction decoding is a significant factor, but I’d love to be proven wrong. If you know of a good writeup about it, I’d love to read it.

Removed by mod

They used to, but they weren’t very good.

Removed by mod

The reason for a lack of “not so good” x86 smartphone chips isn’t some desire to sell “all the ARM shit”. Device manufacturers have to pay to license the ARM instruction set, if there was a viable alternative they would be using it.

The issue is power consumption. Look at the battery life difference between the Intel and Apple Silicon ARM MacBooks. Look at the battery life difference between an x86 and ARM Windows laptop. x86 chips run too hot and need too much power to be viable in a smartphone.

What purpose would an x86 smartphone serve anyway? Also bro you gotta chill on the ellipses.

Removed by mod

Jesus Christ bro, you need to fucking relax before your heart pops. If you care about openness, then call for phone/tablet/laptop manufacturers to shift to RISC-V.

Nothing lasts forever, and x86 and x86_64 have lasted for eons. We will move away from x86, probably in your lifetime (unless you give yourself a coronary event which is honestly pretty likely all things considered), so you should probably come to terms with it.

I honestly can’t tell if your unhinged response is a joke or legit. If you had just tossed in ellipses liberally, in place of every other punctuation mark, I would have known you were joking. With the Trumpian/boomer FB caps rant, I’m thinking maybe you’re actually serious?

Edit - You edited your comment to add more information, which is kind of a dick move. “Intel Atom” is a line of processors, not a specific processor. I tried to find the specs for their last released mobile SoC, the Atom x3-C3295RK I think, and Intel’s spec sheet doesn’t even give a TDP; instead they list an “SDP”, or “Scenario Design Power”. They didn’t publish the actual TDP of the chip anywhere I can find, which is pretty telling. It’s also clocked at 1.1 GHz. The contemporary Snapdragon 835 beat that Atom chip into the dirt.

Removed by mod

Wow you’re really doubling down on the ranting and raving huh?

While I’ve used AMD since FX Bulldozer, and am currently using a laptop with a Ryzen 7 5700U and really enjoying it, Intel’s downfall saddens me because they keep GPU prices down. I mean, would AMD and Nvidia offer 16GB GPUs in the $300 price range if Intel hadn’t put the A770 16GB on the table for $300 first? P.S. AMD always deserved first place and still deserves it now, while Intel is good as a catching-up player that keeps prices down.

Don’t you mean relative decline?

A decline is ALWAYS relative to something, otherwise it wouldn’t make sense. So what is it really that you mean?

Intel used to be the undisputed leader in both CPU design and production process. Both of those positions are lost. Intel also always used to have huge profits, but lately it has had deficits, which used to be absolutely unheard of. They have lost both their economic and technological lead, and they have lost market share. So how is that not a decline by every measure?

Agree

Actually no. If I am standing still and people move past me, I’m not moving backwards.

Your analogy is very incomplete. No one is saying that Intel’s products or technology are “moving backwards”, but rather that their market share and performance as a company are declining.

Take your person “standing still” and imagine they were previously in the lead during a marathon and suddenly stopped before the finish line. They’re not moving backwards, but their position in the race is dropping from first, to second, to third, and they will eventually be last if they don’t start moving again.

Don’t you mean standing relatively still? /s

I always tried to use AMD: 386SX33, 486DX4/100, Duron 1000, Athlon XP 2200. Then I went through a laptop phase with Intel, but since COVID/WFH I went back to AMD, and I have a 5600H in a mini PC.

That has pretty much nothing to do with Intel’s decline though. Losing the enthusiast market to AMD was a small blow, the bigger blow was losing a lot of server market to AMD. And now AMD is starting to dominate in pretty much every CPU market there is, outside of the very low power devices where ARM is dominant and expanding.